Projects & Problems

Mobility Assistant For Visually Impaired

Project Description: MAVI is an ambitious project aimed at enabling mobility for visually impaired individuals, specially in India. A major challenge in this regard is the wide range of complexities that arise in the Indian Scenario due to non-standard practices.

First Person Action Recognition Using Deep Learned Descriptors

Project Description: We focus on the problem of wearer’s action recognition in first person a.k.a. egocentric videos. This problem is more challenging than third person activity recognition due to unavailability of wearer’s pose, and sharp movements in the videos caused by the natural head motion of the wearer. Carefully crafted features based on hands and objects cues for the problem have been shown to be successful for limited targeted datasets. We propose convolutional neural networks (CNNs) for end to end learning and classification of wearer’s actions.

Face Fiducial Detection by Consensus of Exemplars

Project Description: Facial fiducial detection is a challenging problem for several reasons like varying pose, appearance, expression, partial occlusion and others. In the past, several approaches like mixture of trees , regression based methods, exemplar based methods have been proposed to tackle this challenge. In this paper, we propose an exemplar based approach to select the best solution from among outputs of regression and mixture of trees based algorithms (which we call candidate algorithms).

Fine-Tuning Human Pose Estimation in Videos

Project Description: We propose a semi-supervised self-training method for fine-tuning human pose estimations in videos that provides accurate estimations even for complex sequences. We surpass state-of-the-art on most of the datasets used and also show a gain over the baseline on our new dataset of unrestricted sports videos. The self-training model presented has two components: a static Pictorial Structure based model and a dynamic ensemble of exemplars. We present a pose quality criteria that is primarily used for batch selection and automatic parameter selection.

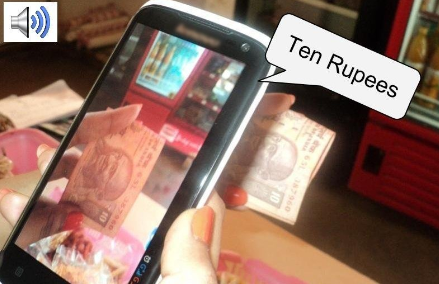

Currency Recognition on Mobile Phones

Project Description: We present an application for recognizing currency bills using computer vision techniques, that can run on a low-end smart-phone. The application runs on the device without the need for any remote server. It is intended for robust, practical use by the visually impaired. The app is designed to automatically captures the image once the user holds the phone still for around 3-4 seconds and then play an audio message which has the denomination of the bill.